HTML

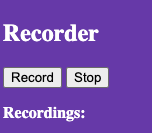

First come the controls: one button to start recording and one to stop it.

```html
<button id="recordButton">Record</button>
<button id="stopButton">Stop</button>
```

Then we have an ordered list with the id recordingsList to hold all the recordings.

```html
<p><strong>Recordings:</strong></p>
<ol id="recordingsList"></ol>
```

Recorder.js

We create a new AudioContext and pass it as a parameter to the Recorder constructor.

We must implement the onAnalysed callback handler.

```js
const audioContext = new (window.AudioContext || window.webkitAudioContext)();

const recorder = new Recorder(audioContext, {
  // An array of 255 Numbers
  // You can use this to visualize the audio stream
  // If you use react, check out react-wave-stream
  onAnalysed: data => {
    // console.log(data)
  }
});

let isRecording = false;
let myBlob = null;

navigator.mediaDevices.getUserMedia({audio: true})
  .then(stream => recorder.init(stream))
  .catch(err => console.log('Uh oh... unable to get stream...', err));
```
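As a rough illustration of what the onAnalysed handler could do with its data, the sketch below scales the values to bar heights for a simple level meter. It assumes the callback receives a plain array of numbers between 0 and 255, as the comment in the code above suggests. The helper name toBarHeights and the maxHeight parameter are made up for this example and are not part of Recorder.js.

```javascript
// Hypothetical helper, not part of Recorder.js: scales analyser values
// (assumed to be numbers from 0 to 255) to bar heights in pixels.
function toBarHeights(data, maxHeight) {
  return Array.from(data, value => {
    // Clamp defensively in case a value falls outside the assumed range
    const clamped = Math.min(255, Math.max(0, value));
    return Math.round((clamped / 255) * maxHeight);
  });
}

// Example: three samples drawn into a 100px-tall area
console.log(toBarHeights([0, 128, 255], 100)); // [0, 50, 100]
```

Inside onAnalysed, the resulting heights could then be applied to a row of div elements or drawn on a canvas.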

Only after the stream has been initialized can we start using the recorder.
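Since everything depends on getUserMedia succeeding, it can help to check up front whether the API is available at all. Browsers only expose navigator.mediaDevices in secure contexts (HTTPS or localhost), so on a plain HTTP page it is undefined. The sketch below is one possible check. The function name canRecordAudio is hypothetical, and it takes a navigator-like object as an argument so it can be tried outside the browser too.

```javascript
// Hypothetical helper: reports whether a navigator-like object
// exposes mediaDevices.getUserMedia.
function canRecordAudio(nav) {
  return Boolean(
    nav &&
    nav.mediaDevices &&
    typeof nav.mediaDevices.getUserMedia === 'function'
  );
}

// In the page, check before asking for the microphone
if (typeof navigator !== 'undefined' && !canRecordAudio(navigator)) {
  console.log('Audio recording is not available in this environment.');
}
```

When the check fails, the page could disable the Record button instead of letting the getUserMedia call throw.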

The handler that starts recording:

```js
function startRecording() {
  recorder.start()
    .then(() => isRecording = true);
}
```

The handler that stops recording:

```js
function stopRecording() {
  recorder.stop()
    .then(({blob, buffer}) => {
      isRecording = false; // recording has ended, so reset the flag
      myBlob = blob;

      var recordingsList = document.getElementById("recordingsList");
      var url = URL.createObjectURL(myBlob);
      var au = document.createElement('audio');
      au.controls = true;
      au.src = url;

      var li = document.createElement('li'); // create the list item
      li.appendChild(au); // add the new audio element to li
      li.appendChild(document.createTextNode(" ")); // add a space in between

      // add the li element to the ol
      recordingsList.appendChild(li);
    });
}
```

Finally, attach the handlers to the buttons' click events.

```js
var recordButton = document.getElementById("recordButton");
var stopButton = document.getElementById("stopButton");

recordButton.addEventListener("click", startRecording);
stopButton.addEventListener("click", stopRecording);
```
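The isRecording flag in the code above is set but never checked, so clicking Record twice, or Stop before Record, still reaches the recorder. One way to guard against that is sketched below. The wrapper createRecordingControls is a hypothetical name, and the only assumption about the recorder is what the handlers above already rely on: start() and stop() methods that return promises.

```javascript
// Hypothetical wrapper: ignores clicks that do not make sense
// in the current state (start while recording, stop while idle).
function createRecordingControls(recorder) {
  let isRecording = false;

  function start() {
    if (isRecording) return Promise.resolve(false); // already recording
    isRecording = true; // set early so a rapid second click is ignored
    return recorder.start().then(
      () => true,
      err => {
        isRecording = false; // roll back if the recorder failed to start
        throw err;
      }
    );
  }

  function stop() {
    if (!isRecording) return Promise.resolve(null); // nothing to stop
    isRecording = false;
    return recorder.stop(); // resolves with whatever the recorder returns
  }

  return { start, stop, isRecording: () => isRecording };
}
```

With such a wrapper, the click handlers could call controls.start() and controls.stop() in place of calling the recorder directly, and stopRecording would only build a list item when stop() resolves with a non-null result.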