Not blocking the UI with FMDB Queue

So notifications are coming in rapidly. Each one arrives on its own thread, and each thread’s data gets inserted into your database through FMDB. FMDB stays thread safe because FMDatabaseQueue runs every block through dispatch_sync:

FMDatabaseQueue.m

```objc
- (void)inDatabase:(void (^)(FMDatabase *db))block {

    dispatch_sync(_queue, ^() {

        FMDatabase *db = [self database];
        block(db);
        ...
        ...
    });
}
```

It gets the block of database code you pass in, and puts it on a SERIAL QUEUE.

The dispatch_sync used here is not a tool for getting concurrent execution, it is a tool for temporarily limiting it for safety.

The SERIAL QUEUE ensures safety by having each block line up one after another, and start their execution ONLY AFTER the previous task has finished executing. This ensures that you are thread safe because they are not writing over each other at the same time.
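As a rough illustration of that guarantee, here is a minimal sketch that is not FMDB code. A hypothetical helper, CountWithSerialQueue, bumps a plain counter from many threads but funnels every increment through one serial queue, the same idea FMDatabaseQueue relies on. The function name and the queue label are made up for this example.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of FMDB): increments an unprotected counter
// from many threads, with every increment going through one serial queue.
static NSInteger CountWithSerialQueue(NSInteger iterations) {
    dispatch_queue_t serialQueue = dispatch_queue_create("demo.serial", DISPATCH_QUEUE_SERIAL);
    __block NSInteger counter = 0;

    // dispatch_apply may run the iterations in parallel on several threads,
    // and returns only after all of them have finished.
    dispatch_apply((size_t)iterations,
                   dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0),
                   ^(size_t index) {
        dispatch_sync(serialQueue, ^{
            counter += 1; // only one block at a time runs on serialQueue
        });
    });
    return counter;
}
```

Because the blocks line up on the serial queue, no increment is lost, so the returned value equals the number of iterations.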

However, there is a problem. Let’s say your main thread runs a for loop that calls inDatabase: on every iteration. Each call places a block on FMDatabaseQueue’s serial queue with dispatch_sync, so your main thread is blocked while each task is processed. By definition, dispatch_sync DOES NOT RETURN until its block has finished executing.
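To see the blocking in isolation, the sketch below measures how long the caller is held up when it submits a slow task. CallerWaitTime is a hypothetical helper, and the 0.2 second sleep is an assumption that stands in for a database write.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: returns how long the *calling thread* was held up
// while submitting one slow task to a serial queue.
static NSTimeInterval CallerWaitTime(BOOL useSync) {
    dispatch_queue_t queue = dispatch_queue_create("demo.serial", DISPATCH_QUEUE_SERIAL);
    dispatch_block_t slowTask = ^{
        [NSThread sleepForTimeInterval:0.2]; // stand-in for a database write
    };

    NSDate *start = [NSDate date];
    if (useSync) {
        dispatch_sync(queue, slowTask);  // returns only after slowTask has run
    } else {
        dispatch_async(queue, slowTask); // returns right away
    }
    NSTimeInterval waited = [[NSDate date] timeIntervalSinceDate:start];

    // Let any pending task finish before the queue goes out of scope.
    dispatch_sync(queue, ^{});
    return waited;
}
```

With useSync set to YES the caller waits for the whole task; with NO it returns almost immediately. If the caller is the main thread, that wait is exactly the time the UI is frozen.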

Proving FMDB does block

We need to prove that FMDB does indeed block our UI so we first put a UISlider in ViewController for testing purposes. If we are concurrently processing all these incoming notifications in the background, then this UISlider should be responsive.

You put a slider on your UI like so:

```objc
UISlider *slider = [[UISlider alloc] initWithFrame:frame];
[slider addTarget:self action:@selector(sliderAction:) forControlEvents:UIControlEventValueChanged];
[slider setBackgroundColor:[UIColor redColor]];
slider.minimumValue = 0.0;
slider.maximumValue = 50.0;
slider.continuous = YES;
slider.value = 25.0;
[self.view addSubview:slider];
```

When you run a simple for loop on the main thread that calls a method such as executeUpdateOnDBwithSQLParams:, every iteration ends up in a dispatch_sync on your main thread. This blocks, and your UI will NOT be responsive.

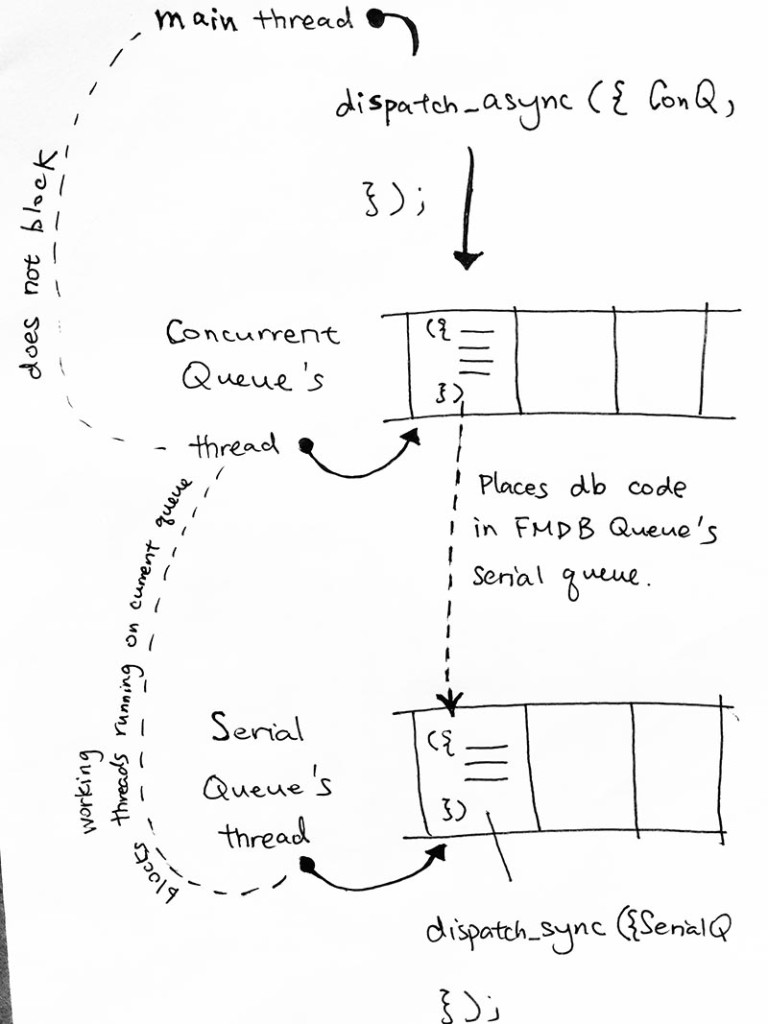

In order to solve this, we do 2 things:

- Use a concurrent queue, and have the main thread dispatch work onto it asynchronously, so the work runs concurrently and the UI is not blocked

- Inside each block on that concurrent queue, queue up the db job on FMDB’s thread-safe serial queue

Solution

dispatch_sync does not return until its task is finished. Thus, while the task is executing, the main queue can’t do anything because the dispatch_sync has not returned. That’s the gist of the issue.

What we did to solve this issue is to dispatch_async the FMDB tasks onto a concurrent queue.

This is the basic setup that enables fmdb to be non-blocking.

1) We set up a block on a concurrent queue first. This ensures that whatever runs inside of that block is able to run concurrently with the main thread.

2) The block starts off by executing its log, then the PRE-TASK. Then it calls dispatch_sync with the “DB task” block. The sync call holds up the rest of this block on conQueue (not the main thread) until the DB task has finished. That’s why POST-TASK runs after the DB task.

3) Finally, after PRE-TASK and then the DB task have finished running, POST-TASK runs.

```objc
// note that conQueue is a concurrent Queue
// queue is a serial queue

dispatch_async(conQueue, ^{

    NSLog(@"--- start block (concurrent queue) ---");

    [self taskName:@"PRE-TASK on concurrent queue" countTo:3 sleepFor:1.0f];

    dispatch_sync(queue, ^{ //queue up a task on queueA
        NSLog(@"--- start block (sync queue) ---");
        //must finish this line
        [self taskName:@"DB task" countTo:10 sleepFor:1.0f];
        NSLog(@"--- end block (sync queue) ---");
    });

    [self taskName:@"POST-TASK on concurrent queue" countTo:3 sleepFor:1.0f];
    NSLog(@"--- end block (concurrent queue) ---");
});
```

```
--- start block (concurrent queue) ---
--- Task PRE-TASK on concurrent queue start ---
PRE-TASK on concurrent queue - 0
PRE-TASK on concurrent queue - 1
PRE-TASK on concurrent queue - 2
^^^ Task PRE-TASK on concurrent queue END ^^^
--- start block (sync queue) ---
--- Task DB task start ---
DB task - 0
DB task - 1
DB task - 2
DB task - 3
DB task - 4
DB task - 5
DB task - 6
DB task - 7
DB task - 8
DB task - 9
^^^ Task DB task END ^^^
--- end block (sync queue) ---
--- Task POST-TASK on concurrent queue start ---
POST-TASK on concurrent queue - 0
POST-TASK on concurrent queue - 1
POST-TASK on concurrent queue - 2
^^^ Task POST-TASK on concurrent queue END ^^^
--- end block (concurrent queue) ---
```

The dispatch_sync is to simulate FMDB like so:

```objc
- (void)inDatabase:(void (^)(FMDatabase *db))block {

    dispatch_sync(_queue, ^() {

        FMDatabase *db = [self database];
        block(db);
        ...
        ...
    });
}
```

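So BOTH things can happen at the same time:

- dispatch_sync is running its db tasks (fmdb) on a background thread of the concurrent queue

- the main queue stays free, so you can move your UI

because the whole block was handed to the concurrent queue via dispatch_async.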

Thus, that’s how you get FMDB to be non-blocking.
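The same pattern can be sketched without FMDB. In the example below, dbQueue is only a stand-in for FMDatabaseQueue’s private serial queue, and RunBackgroundPattern and the queue labels are hypothetical names. The semaphore wait at the end exists only so the sketch can hand back its result; in an app the caller would not wait.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: runs PRE-TASK, a simulated DB task, and POST-TASK
// in the background and returns the order in which they ran.
static NSArray *RunBackgroundPattern(void) {
    dispatch_queue_t conQueue = dispatch_queue_create("demo.concurrent", DISPATCH_QUEUE_CONCURRENT);
    dispatch_queue_t dbQueue = dispatch_queue_create("demo.db", DISPATCH_QUEUE_SERIAL); // stand-in for FMDB's queue
    dispatch_semaphore_t done = dispatch_semaphore_create(0);
    NSMutableArray *events = [NSMutableArray array];

    dispatch_async(conQueue, ^{              // the caller is not held up here
        [events addObject:@"PRE-TASK"];
        dispatch_sync(dbQueue, ^{            // holds up only this background block
            [NSThread sleepForTimeInterval:0.1];
            [events addObject:@"DB task"];
        });
        [events addObject:@"POST-TASK"];     // runs after the DB task has finished
        dispatch_semaphore_signal(done);
    });

    // Demo only: wait so the recorded order can be returned.
    dispatch_semaphore_wait(done, DISPATCH_TIME_FOREVER);
    return [events copy];
}
```

The recorded order is PRE-TASK, DB task, POST-TASK, matching the log output shown earlier.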

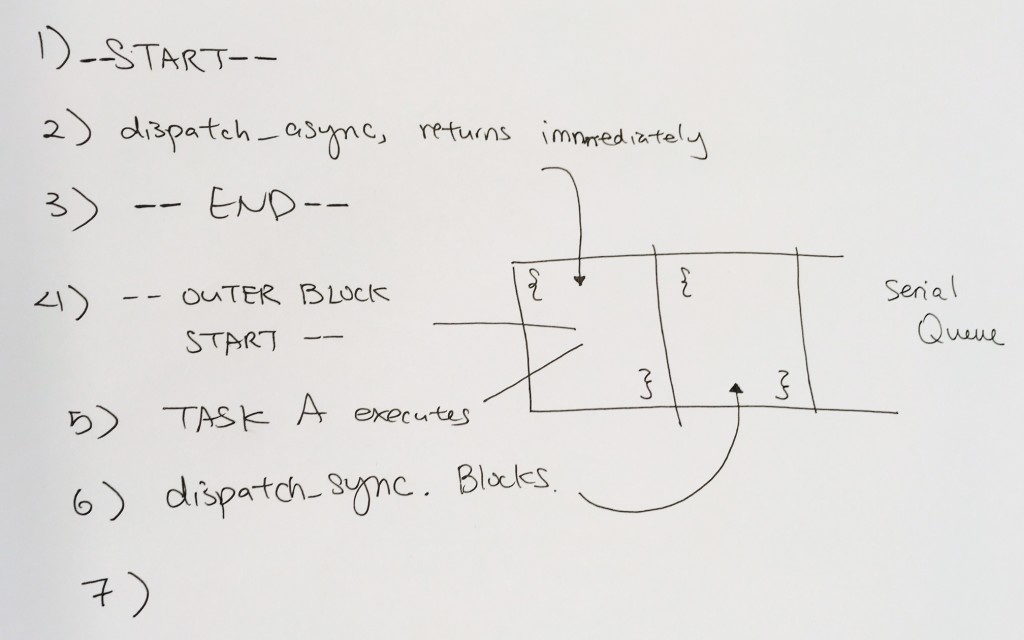

Tidbit: changing dispatch_async to dispatch_sync on the concurrent queue

If you were to change from dispatch_async to dispatch_sync on the concurrent queue “conQueue”, it will block the main queue when it first starts up because, by definition, dispatch_sync does not return right away. It will return later, when the block has run to the end, but for now it blocks and you’re not able to move your UI.

Thus, it runs to PRE-TASK, and executes that.

Then it moves down, and submits the “DB task” block via dispatch_async to the serial queue “queue”.

The dispatch_async returns immediately, “DB task” starts executing on the serial queue “queue”, and the block moves on to POST-TASK. Thus, DB task and POST-TASK will be executing together.

After POST-TASK finishes, our concurrent block has run to the end, and the dispatch_sync returns.

At this point, you will be able to move the UI. “DB task” continues its execution because it was handed to the serial queue “queue”, which keeps running it on a background thread.

Nothing is waiting on that task anymore, so you will be able to move around the UI while it finishes.

```objc
dispatch_sync(conQueue, ^{

    NSLog(@"--- start block (concurrent queue) ---");

    [self taskName:@"PRE-TASK on concurrent queue" countTo:3 sleepFor:1.0f];

    dispatch_async(queue, ^{ //queue up a task on queueA
        NSLog(@"--- start block (sync queue) ---");
        //must finish this line
        [self taskName:@"DB task" countTo:10 sleepFor:1.0f];
        NSLog(@"--- end block (sync queue) ---");
    });

    [self taskName:@"POST-TASK on concurrent queue" countTo:3 sleepFor:1.0f];
    NSLog(@"--- end block (concurrent queue) ---");
});
```

Other details when you have time

```objc
-(void)insertPortfolioWithPfNum:(NSString*)portfolioNum
                    performance:(NSString*)performance
                            aum:(NSString*)aum
                            ccy:(NSString*)currency
                chartJSONString:(NSString*)json
                        andTime:(NSString*)time
{
    dispatch_async(concurrencyQueue, ^{
        NSLog(@"inserting portfolio %@", portfolioNum);
        [self executeUpdateOnDBwithSQLParams: INSERT_INTO_PORTFOLIOS, @"portfolio", portfolioNum, performance, aum, currency, json, time];
    });
}
```

where concurrencyQueue is created and initialized like so:

```objc
static dispatch_queue_t concurrencyQueue; //concurrency queue

@interface DatabaseFunctions() {}
@end

-(instancetype)init {
    ...
    ...
    concurrencyQueue = dispatch_queue_create("com.epam.halo.queue", DISPATCH_QUEUE_CONCURRENT);
    ...
    ...
}
```

But what about the database writing that is dispatch_sync on the serial queue? Wouldn’t that block?

No. A dispatch_sync only blocks the thread that calls it. In this case the caller is a worker thread of the concurrent queue, so that background thread waits for FMDatabaseQueue’s serial queue while the main thread stays free.
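A quick way to check which thread does the waiting is to ask NSThread from inside the blocks. The sketch below is an illustration under assumptions: dbQueue simulates FMDB’s serial queue, and DBWriteRunsOffMainThread is a hypothetical name. The semaphore wait is there only so the function can return its answer.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: returns YES when the simulated DB write ran on a
// thread other than the main thread.
static BOOL DBWriteRunsOffMainThread(void) {
    dispatch_queue_t concurrencyQueue = dispatch_queue_create("demo.concurrent", DISPATCH_QUEUE_CONCURRENT);
    dispatch_queue_t dbQueue = dispatch_queue_create("demo.db", DISPATCH_QUEUE_SERIAL); // stand-in for FMDB's queue
    dispatch_semaphore_t done = dispatch_semaphore_create(0);
    __block BOOL ranOnMain = YES;

    dispatch_async(concurrencyQueue, ^{
        // This dispatch_sync is called from a background worker thread,
        // so that worker (not the main thread) is the one that waits.
        dispatch_sync(dbQueue, ^{
            ranOnMain = [NSThread isMainThread];
        });
        dispatch_semaphore_signal(done);
    });

    // Demo only: wait for the result so it can be returned.
    dispatch_semaphore_wait(done, DISPATCH_TIME_FOREVER);
    return !ranOnMain;
}
```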

max of 64 threads running concurrently

ref – http://stackoverflow.com/questions/34849078/main-thread-does-dispatch-async-on-a-concurrent-queue-in-viewdidload-or-within

Note that when you are using custom concurrent queues, GCD only gives you a limited pool of worker threads; as the linked answer describes, the limit is 64 threads running at once. That’s why, when your main thread keeps queueing tasks onto the concurrent queue, the system starts blocking your UI after 64 tasks are on the queue.

The workaround is to have the main queue do the job of placing db tasks onto the concurrent queue, like so:

```objc
-(void)run_async_with_UI:(void (^)(void)) task {

    dispatch_async(dispatch_get_main_queue(), ^() {

        dispatch_async(concurrencyQueue, ^{
            task();
        });

    });
}
```

Then simply call the utility method run_async_with_UI and place your database calls in there.

Proof of concept

The dispatch_sync(serialQueue,….) is essentially the FMDB Queue.

We just added dispatch_async(concurrencyQueue…). Now, you can see that we are manipulating the database in a thread-safe manner, in the background, without clogging up the UI.

```objc
for ( int i = 0; i < 30; i++) {

    dispatch_async(concurrencyQueue, ^() {

        NSLog(@"concurrentQueue inserts task %d <--", i);
        //dispatch_sync function WILL NOT CONTINUE enqueueing further tasks until
        //this block has been executed
        //won't return until this block is finished
        //hence its blocks
        dispatch_sync(serialQueue, ^() {

            //this is to simulate writing to database
            NSLog(@"serialQueue - START %d---------", i);
            [NSThread sleepForTimeInterval:8.0f];
            NSLog(@"serialQueue - FINISHED %d--------", i);
        });

        //reached only after the dispatch_sync
        //above has returned
        NSLog(@"concurrentQueue END task %d -->", i);
    });
}
```

result:

```
concurrentQueue inserts task 1 <--
2016-08-17 10:58:48.477 trash[6381:704826] concurrentQueue inserts task 0 <--
2016-08-17 10:58:48.477 trash[6381:704838] concurrentQueue inserts task 3 <--
2016-08-17 10:58:48.477 trash[6381:704830] concurrentQueue inserts task 2 <--
2016-08-17 10:58:48.478 trash[6381:704816] serialQueue - START 1---------
2016-08-17 10:58:48.478 trash[6381:704839] concurrentQueue inserts task 4 <--
2016-08-17 10:58:48.478 trash[6381:704840] concurrentQueue inserts task 5 <--
2016-08-17 10:58:48.478 trash[6381:704841] concurrentQueue inserts task 6 <--
2016-08-17 10:58:48.479 trash[6381:704842] concurrentQueue inserts task 7 <--
2016-08-17 10:58:48.479 trash[6381:704843] concurrentQueue inserts task 8 <--
2016-08-17 10:58:48.479 trash[6381:704844] concurrentQueue inserts task 9 <--
2016-08-17 10:58:48.480 trash[6381:704845] concurrentQueue inserts task 10 <--
...
...
```

So, the dispatch_async throws all the tasks onto the concurrent queue and returns immediately each time (it does not block). That’s why all the task blocks log “concurrentQueue inserts task n” right away.

Inside each of those blocks, dispatch_sync hands the simulated write to serialQueue. dispatch_sync by definition won’t return until its block has finished executing. Hence, the “concurrentQueue END task n” message won’t be logged until after the block on serialQueue has been executed.

Notice how serialQueue logs FINISHED 1, then concurrentQueue logs END task 1.

serialQueue logs FINISHED 0, then concurrentQueue logs END task 0…

It’s because dispatch_sync does not return until its block has finished executing.

Once it returns, the block continues on to the “concurrentQueue END task n” log message.

In other words, due to dispatch_sync, lines 10-16 (the simulated write on serialQueue) must run before line 20 (the END log) runs.

```
concurrentQueue inserts task 27 <--
2016-08-17 14:12:34.938 trash[6685:772616] concurrentQueue inserts task 28 <--
2016-08-17 14:12:34.938 trash[6685:772617] concurrentQueue inserts task 29 <--
2016-08-17 14:12:42.933 trash[6685:772590] serialQueue - FINISHED 2--------
2016-08-17 14:12:42.933 trash[6685:772590] concurrentQueue END task 2 -->
2016-08-17 14:12:42.933 trash[6685:772558] serialQueue - START 0---------
2016-08-17 14:12:50.933 trash[6685:772558] serialQueue - FINISHED 0--------
2016-08-17 14:12:50.933 trash[6685:772558] concurrentQueue END task 0 -->
2016-08-17 14:12:50.933 trash[6685:772570] serialQueue - START 1---------
2016-08-17 14:12:58.938 trash[6685:772570] serialQueue - FINISHED 1--------
2016-08-17 14:12:58.938 trash[6685:772591] serialQueue - START 3---------
2016-08-17 14:12:58.938 trash[6685:772570] concurrentQueue END task 1 -->
2016-08-17 14:13:06.943 trash[6685:772591] serialQueue - FINISHED 3--------
2016-08-17 14:13:06.944 trash[6685:772591] concurrentQueue END task 3 -->
2016-08-17 14:13:06.944 trash[6685:772592] serialQueue - START 4---------
2016-08-17 14:13:14.947 trash[6685:772592] serialQueue - FINISHED 4--------
2016-08-17 14:13:14.947 trash[6685:772592] concurrentQueue END task 4 -->
2016-08-17 14:13:14.947 trash[6685:772593] serialQueue - START 5---------
2016-08-17 14:13:22.952 trash[6685:772593] serialQueue - FINISHED 5--------
2016-08-17 14:13:22.953 trash[6685:772593] concurrentQueue END task 5 -->
2016-08-17 14:13:22.953 trash[6685:772594] serialQueue - START 6---------
2016-08-17 14:13:30.958 trash[6685:772594] serialQueue - FINISHED 6--------
2016-08-17 14:13:30.958 trash[6685:772594] concurrentQueue END task 6 -->
2016-08-17 14:13:30.958 trash[6685:772595] serialQueue - START 7---------
2016-08-17 14:13:38.963 trash[6685:772595] serialQueue - FINISHED 7--------
2016-08-17 14:13:38.963 trash[6685:772595] concurrentQueue END task 7 -->
2016-08-17 14:13:38.963 trash[6685:772596] serialQueue - START 8---------
2016-08-17 14:13:46.965 trash[6685:772596] serialQueue - FINISHED 8--------
```

Another important thing to notice is that serialQueue has already started to execute. But by definition, dispatch_sync blocks and does not return until the currently executing task is finished…so how does concurrentQueue keep inserting?

The reason is that each dispatch_sync is called from a block running in the background on concurrentQueue. The dispatch_sync that is not returning only holds up that background worker thread, and thus does not affect the UI or the for loop, which keeps enqueueing with dispatch_async. The enqueueing of the “db simulate write” onto serialQueue is done on the background queue concurrentQueue.

Say we switch it

So now we dispatch_sync each block onto the concurrent queue, and the call will not return until that block has finished. The key point here is that the dispatch_async inside the block throws the task onto serialQueue and returns immediately, so each block finishes almost at once. The result is lots of fast enqueueing of blocks onto the concurrent queue, and thus rapid logging of line 5 and line 20 (the “inserts task” and “END task” messages).

example:

1. block task 1 goes onto concurrent queue via dispatch_sync, WILL NOT RETURN UNTIL WHOLE TASK BLOCK IS FINISHED

2. “simulate DB write” task block goes onto serial Queue via dispatch_async, RETURNS RIGHT AWAY.

3. block task 1 finished, thus dispatch_sync RETURNS control to the for loop.

4. block task 2 goes onto concurrent queue via dispatch_sync, WILL NOT RETURN UNTIL WHOLE TASK BLOCK IS FINISHED

5. “simulate DB write” task block goes onto serial Queue via dispatch_async, RETURNS RIGHT AWAY.

6. block task 2 finished, thus dispatch_sync RETURNS control to the for loop.

… and so on.

This continues while the serialQueue, being a background queue, processes its blocks one at a time. For the first block it will display:

serialQueue - START 0---------

serialQueue - FINISHED 0--------

Hence, the situation is that all the blocks that put a “simulate write” task onto the serial queue are run through the concurrent queue quickly.

Then, when the serial queue gets to execute its first task, that’s when it does its first “DB write simulate”. This DB write simulate does not block the UI because it’s being done in the background.
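A scaled-down sketch of this switched arrangement is below. WriteOrderWhenSwitched is a hypothetical helper, the queue labels are made up, and the 0.05 second sleep is an assumed stand-in for the 8 second write. It records the order in which the simulated writes run on the serial queue.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: outer dispatch_sync on a concurrent queue, inner
// dispatch_async onto a serial queue. Returns the order the writes ran in.
static NSArray *WriteOrderWhenSwitched(int taskCount) {
    dispatch_queue_t concurrencyQueue = dispatch_queue_create("demo.concurrent", DISPATCH_QUEUE_CONCURRENT);
    dispatch_queue_t serialQueue = dispatch_queue_create("demo.serial", DISPATCH_QUEUE_SERIAL);
    NSMutableArray *order = [NSMutableArray array];

    for (int i = 0; i < taskCount; i++) {
        // Each outer block finishes almost at once, because the inner
        // dispatch_async returns immediately.
        dispatch_sync(concurrencyQueue, ^{
            dispatch_async(serialQueue, ^{
                [NSThread sleepForTimeInterval:0.05]; // simulated write
                [order addObject:@(i)];               // touched only on serialQueue
            });
        });
    }

    // Demo only: wait for the serial queue to drain before reading `order`.
    dispatch_sync(serialQueue, ^{});
    return [order copy];
}
```

Since the writes are enqueued one after another from a single loop and the serial queue is first in, first out, they run in the order 0, 1, 2, and so on, as in the log further down.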

```objc
for ( int i = 0; i < 30; i++) {

    dispatch_sync(concurrencyQueue, ^() {

        NSLog(@"concurrentQueue inserts task %d <--", i);
        //the outer dispatch_sync WILL NOT CONTINUE enqueueing further tasks until
        //this block has been executed
        //the inner dispatch_async returns right away,
        //so this block finishes almost immediately
        dispatch_async(serialQueue, ^() {

            //this is to simulate writing to database
            NSLog(@"serialQueue - START %d---------", i);
            [NSThread sleepForTimeInterval:8.0f];
            NSLog(@"serialQueue - FINISHED %d--------", i);
        });

        //reached immediately, because the
        //dispatch_async above has already returned
        NSLog(@"concurrentQueue END task %d -->", i);
    });
}
```

result:

```
concurrentQueue inserts task 0 <--
2016-08-17 11:35:30.898 trash[6447:722065] concurrentQueue END task 0 -->
2016-08-17 11:35:30.898 trash[6447:722150] serialQueue - START 0---------
2016-08-17 11:35:30.898 trash[6447:722065] concurrentQueue inserts task 1 <--
2016-08-17 11:35:30.898 trash[6447:722065] concurrentQueue END task 1 -->
2016-08-17 11:35:30.898 trash[6447:722065] concurrentQueue inserts task 2 <--
2016-08-17 11:35:30.898 trash[6447:722065] concurrentQueue END task 2 -->
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue inserts task 3 <--
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue END task 3 -->
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue inserts task 4 <--
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue END task 4 -->
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue inserts task 5 <--
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue END task 5 -->
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue inserts task 6 <--
2016-08-17 11:35:30.899 trash[6447:722065] concurrentQueue END task 6 -->
2016-08-17 11:35:30.900 trash[6447:722065] concurrentQueue inserts task 7 <--
2016-08-17 11:35:30.900 trash[6447:722065] concurrentQueue END task 7 -->
2016-08-17 11:35:30.900 trash[6447:722065] concurrentQueue inserts task 8 <--
2016-08-17 11:35:30.900 trash[6447:722065] concurrentQueue END task 8 -->
2016-08-17 11:35:30.900 trash[6447:722065] concurrentQueue inserts task 9 <--
2016-08-17 11:35:30.900 trash[6447:722065] concurrentQueue END task 9 -->
2016-08-17 11:35:30.900 trash[6447:722065] concurrentQueue inserts task 10 <--
2016-08-17 11:35:30.901 trash[6447:722065] concurrentQueue END task 10 -->
2016-08-17 11:35:30.901 trash[6447:722065] concurrentQueue inserts task 11 <--
2016-08-17 11:35:30.901 trash[6447:722065] concurrentQueue END task 11 -->
2016-08-17 11:35:30.901 trash[6447:722065] concurrentQueue inserts task 12 <--
2016-08-17 11:35:30.901 trash[6447:722065] concurrentQueue END task 12 -->
2016-08-17 11:35:30.901 trash[6447:722065] concurrentQueue inserts task 13 <--
```

Then, after all the tasks have been enqueued onto the concurrent queue, the serialQueue keeps processing its tasks one by one.

```
concurrentQueue inserts task 28 <--
2016-08-17 11:35:30.905 trash[6447:722065] concurrentQueue END task 28 -->
2016-08-17 11:35:30.905 trash[6447:722065] concurrentQueue inserts task 29 <--
2016-08-17 11:35:30.906 trash[6447:722065] concurrentQueue END task 29 -->
2016-08-17 11:35:38.900 trash[6447:722150] serialQueue - FINISHED 0--------
2016-08-17 11:35:38.900 trash[6447:722150] serialQueue - START 1---------
2016-08-17 11:35:46.904 trash[6447:722150] serialQueue - FINISHED 1--------
2016-08-17 11:35:46.904 trash[6447:722150] serialQueue - START 2---------
2016-08-17 11:35:54.908 trash[6447:722150] serialQueue - FINISHED 2--------
2016-08-17 11:35:54.908 trash[6447:722150] serialQueue - START 3---------
2016-08-17 11:36:02.910 trash[6447:722150] serialQueue - FINISHED 3--------
2016-08-17 11:36:02.910 trash[6447:722150] serialQueue - START 4---------
2016-08-17 11:36:10.915 trash[6447:722150] serialQueue - FINISHED 4--------
2016-08-17 11:36:10.916 trash[6447:722150] serialQueue - START 5---------
2016-08-17 11:36:18.921 trash[6447:722150] serialQueue - FINISHED 5--------
2016-08-17 11:36:18.921 trash[6447:722150] serialQueue - START 6---------
2016-08-17 11:36:26.926 trash[6447:722150] serialQueue - FINISHED 6--------
2016-08-17 11:36:26.926 trash[6447:722150] serialQueue - START 7---------
2016-08-17 11:36:34.930 trash[6447:722150] serialQueue - FINISHED 7--------
```