ref: http://stackoverflow.com/questions/4360591/help-with-multi-threading-on-ios

The main reason why you want to use concurrent or serial queues over the main queue is to run tasks in the background.

Sync on a Serial Queue

dispatch_sync –

1) dispatch_sync enqueues the block and makes the caller wait: the calling thread does NOT go on to submit further work UNTIL the block has been executed.

2) dispatch_sync is a blocking call. It DOES NOT RETURN until the block has finished executing. It blocks only the thread that called it, not other threads or queues.

    NSLog(@"--- START ---");                                    // 1

    dispatch_sync(serialQueue2, ^{                              // 2
        NSLog(@"-- dispatch start --");                         // 3
        [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
        NSLog(@"-- dispatch end --");
    });

    NSLog(@"--- END ---");                                      // 4

1) prints START

2) puts the block of code onto serialQueue2, then blocks, i.e. dispatch_sync does not return yet

3) the block of code executes

4) When the block of code finishes, dispatch_sync returns, and we move on to the next instruction, which prints END

output:

— START —

— dispatch start —

— Task TASK B start —

TASK B – 0

TASK B – 1

…

…

TASK B – 8

TASK B – 9

^^^ Task TASK B END ^^^

— dispatch end —

— END —

Because dispatch_sync does not return until the block finishes, and we called it from the main thread, the main thread is blocked for the whole duration. Try dragging your UISlider while the task runs.

It is not responsive.
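The taskName:countTo:sleepFor: helper used in every example is never listed in this post. Below is a minimal sketch that would produce output of the same shape, assuming the helper simply sleeps and then logs once per step. The class name TaskRunner and the exact log strings are guesses reconstructed from the output, not the original code.

```objc
#import <Foundation/Foundation.h>

// Hypothetical stand-in for the view controller that owns the helper.
@interface TaskRunner : NSObject
- (void)taskName:(NSString *)name countTo:(NSUInteger)count sleepFor:(float)seconds;
@end

@implementation TaskRunner

// Assumed behaviour: announce the task, then sleep and log once per step.
// The sleep blocks only the thread that is running this method.
- (void)taskName:(NSString *)name countTo:(NSUInteger)count sleepFor:(float)seconds {
    NSLog(@"--- Task %@ start ---", name);
    for (NSUInteger i = 0; i < count; i++) {
        [NSThread sleepForTimeInterval:seconds];
        NSLog(@"%@ - %lu", name, (unsigned long)i);
    }
    NSLog(@"^^^ Task %@ END ^^^", name);
}

@end
```

Whichever thread ends up running the block is the one that sleeps, so the helper is a convenient way to see which thread a given dispatch call is tying up.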

dispatch_async, by contrast, enqueues the block and RETURNS IMMEDIATELY, letting the next statements execute and the main thread carry on processing events.

ASYNC on a Serial Queue

Let’s start with a very simple example:

    NSLog(@"--- START ---");                                    // 1

    dispatch_async(serialQueue2, ^{                             // 2
        NSLog(@"-- dispatch start --");                         // 4
        [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
        NSLog(@"-- dispatch end --");
    });

    NSLog(@"--- END ---");                                      // 3

This means:

1) prints START

2) we dispatch a block onto serialQueue2, then control returns immediately.

3) Because dispatch_async returns immediately, we continue down to the next instruction, which prints END

4) the dispatched block starts executing

output:

— START —

— END —

— dispatch start —

— Task TASK B start —

TASK B – 0

…

…

TASK B – 9

^^^ Task TASK B END ^^^

— dispatch end —

If you look at your UISlider, it is still responsive.
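The two orderings seen so far can also be checked in code rather than by reading the log. The sketch below records events into an array instead of calling NSLog. The function names and queue labels are made up for illustration, and the semaphores in the async version exist only to make the ordering deterministic for the demo.

```objc
#import <Foundation/Foundation.h>

// dispatch_sync: the caller waits, so the block's event lands before END.
static NSArray<NSString *> *SyncOrder(void) {
    dispatch_queue_t queue = dispatch_queue_create("com.example.sync-demo", DISPATCH_QUEUE_SERIAL);
    NSMutableArray<NSString *> *events = [NSMutableArray array];

    [events addObject:@"START"];
    dispatch_sync(queue, ^{
        [events addObject:@"BLOCK"];
    });
    [events addObject:@"END"];
    return events;
}

// dispatch_async: the caller continues at once. The block is held back on a
// semaphore until END is recorded, which is only possible because
// dispatch_async has already returned.
static NSArray<NSString *> *AsyncOrder(void) {
    dispatch_queue_t queue = dispatch_queue_create("com.example.async-demo", DISPATCH_QUEUE_SERIAL);
    dispatch_semaphore_t callerLogged = dispatch_semaphore_create(0);
    dispatch_semaphore_t blockDone = dispatch_semaphore_create(0);
    NSMutableArray<NSString *> *events = [NSMutableArray array];

    [events addObject:@"START"];
    dispatch_async(queue, ^{
        dispatch_semaphore_wait(callerLogged, DISPATCH_TIME_FOREVER);
        [events addObject:@"BLOCK"];
        dispatch_semaphore_signal(blockDone);
    });
    [events addObject:@"END"];
    dispatch_semaphore_signal(callerLogged);

    // Wait for the block so the array is complete before returning.
    dispatch_semaphore_wait(blockDone, DISPATCH_TIME_FOREVER);
    return events;
}
```

SyncOrder() yields START, BLOCK, END, while AsyncOrder() yields START, END, BLOCK. If the same wait were placed inside a dispatch_sync block, the caller would never reach the signal and the program would hang.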

Slider code

    UISlider *slider = [[UISlider alloc] initWithFrame:CGRectMake(80.0f, 360.0f, self.view.bounds.size.width / 2, 8.0f)];
    slider.backgroundColor = [UIColor clearColor];
    slider.minimumValue = 0.0f;
    slider.maximumValue = 100.0f;
    slider.continuous = YES;
    slider.value = 75.0f;
    [slider addTarget:self action:@selector(updateValue:) forControlEvents:UIControlEventValueChanged];
    [self.view addSubview:slider];

sync-ed

dispatch_sync enqueues the block and does not return until that block has run, so the caller cannot submit anything else in the meantime.

Now let's dispatch a task (printing numbers) in a sync-ed fashion. We put the task on the queue, and the calling thread then waits inside dispatch_sync until the task is finished.

The sleep call inside the task blocks only the thread that runs the task. Because the caller is waiting for that task, the caller is stalled too; other threads and queues are not affected. Only when all the numbers have been printed does dispatch_sync return, and the caller can move on to its next task (for example, one that prints letters).

Let's throw a button in there, and have it print numbers. We'll do one dispatch_sync first.

    - (void)viewDidLoad {
        [super viewDidLoad];
        // Do any additional setup after loading the view, typically from a nib.

        UISlider *slider = [[UISlider alloc] initWithFrame:CGRectMake(80.0f, 360.0f, self.view.bounds.size.width / 2, 8.0f)];
        slider.backgroundColor = [UIColor clearColor];
        slider.minimumValue = 0.0f;
        slider.maximumValue = 100.0f;
        slider.continuous = YES;
        slider.value = 75.0f;
        [slider addTarget:self action:@selector(updateValue:) forControlEvents:UIControlEventValueChanged];
        [self.view addSubview:slider];

        UIButton *button = [UIButton buttonWithType:UIButtonTypeCustom];
        [button addTarget:self action:@selector(aMethod) forControlEvents:UIControlEventTouchUpInside];
        [button setTitle:@"Show View" forState:UIControlStateNormal];
        [button setBackgroundColor:[UIColor redColor]];
        button.frame = CGRectMake(80.0, 210.0, 160.0, 40.0);
        [self.view addSubview:button];
    }

    - (void)aMethod {
        // global queue runs concurrently
        dispatch_queue_t queueA = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0ul);

        dispatch_sync(queueA, ^{   // queue up a task on queueA
            // task start
            [self taskName:@"TASK B" countTo:20 sleepFor:2.0f];
            // task end
        });
    }

You'll notice that the slider is unresponsive. That's because dispatch_sync blocks the thread that calls it, and aMethod is called on the main thread, so the main thread cannot process touch events until our dispatch_sync's block has been executed. dispatch_sync does not return until it has finished its own task. Other threads and queues are not affected.
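One common way to keep the slider responsive is to submit the work with dispatch_async and hop to another queue when it is done. The sketch below is a hedged illustration of that pattern: RunInBackground is a hypothetical helper, not something from this post, and in an app the completion queue would normally be dispatch_get_main_queue() so that UI updates happen on the main thread.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: runs `work` on a global (concurrent) queue without
// blocking the caller, then submits `completion` to `completionQueue`.
static void RunInBackground(dispatch_block_t work,
                            dispatch_queue_t completionQueue,
                            dispatch_block_t completion) {
    dispatch_queue_t background =
        dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);

    dispatch_async(background, ^{
        work();                                       // slow part, off the caller's thread
        dispatch_async(completionQueue, completion);  // report back without blocking
    });
}
```

In the button handler this would read roughly as RunInBackground(^{ ...slow task... }, dispatch_get_main_queue(), ^{ ...update UI... }). The handler returns right away, so the main thread keeps handling touches.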

Async, Sync, on Concurrent Queue

    dispatch_queue_t queueA = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0ul);

    NSLog(@"-- START --");

    dispatch_async(queueA, ^{   // queue up a task on queueA
        NSLog(@"=== A start ===");
        [self taskName:@"TASK A" countTo:8 sleepFor:1.0f];
        NSLog(@"=== A end ===");
    });

    dispatch_sync(queueA, ^{    // blocks the calling (main) thread until B finishes
        NSLog(@"=== B start ===");
        [self taskName:@"TASK B" countTo:8 sleepFor:1.0f];
        NSLog(@"=== B end ===");
    });

    NSLog(@"-- END --");

Now let's dispatch_async first. Then we'll dispatch_sync. What happens here is that:

1) prints -- START --

2) we dispatch the block for TASK A onto the concurrent queue with dispatch_async, and control returns immediately. A worker thread picks the block up and prints === A start ===

3) Then we dispatch_sync TASK B onto the same queue. dispatch_sync does not return until this block has completed, so the calling thread waits.

Task B starts and prints === B start ===. As an optimization, dispatch_sync runs the block on the calling thread whenever possible, which is why B's lines in the output below carry the same thread ID as -- END --.

The main thread, and with it the UI, is now blocked by Task B's dispatch_sync.

4) Task A is already executing and keeps running alongside B. Both run at the same time because our queue is concurrent.

5) both tasks finish, printing === A end === and === B end ===

6) control returns ONLY WHEN Task B finishes: Task B's dispatch_sync returns, and we move on to the next instruction, which logs -- END --. dispatch_sync waits only for B, so if Task A took longer than B, -- END -- would appear before === A end ===.

output:

14] — START —

2016-08-25 14:26:13.638 sync_async[29186:3817447] === A start ===

2016-08-25 14:26:13.638 sync_async[29186:3817414] === B start ===

2016-08-25 14:26:13.638 sync_async[29186:3817447] — Task TASK A start —

2016-08-25 14:26:13.638 sync_async[29186:3817414] — Task TASK B start —

2016-08-25 14:26:14.640 sync_async[29186:3817414] TASK B – 0

2016-08-25 14:26:14.640 sync_async[29186:3817447] TASK A – 0

…

…

2016-08-25 14:26:21.668 sync_async[29186:3817447] TASK A – 7

2016-08-25 14:26:21.668 sync_async[29186:3817414] TASK B – 7

2016-08-25 14:26:21.668 sync_async[29186:3817447] ^^^ Task TASK A END ^^^

2016-08-25 14:26:21.668 sync_async[29186:3817414] ^^^ Task TASK B END ^^^

2016-08-25 14:26:21.668 sync_async[29186:3817447] === A end ===

2016-08-25 14:26:21.668 sync_async[29186:3817414] === B end ===

2016-08-25 14:26:21.668 sync_async[29186:3817414] — END —

Sync, Async, on Concurrent Queue

    dispatch_queue_t queueA = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0ul);

    NSLog(@"-- START --");                                      // 1

    dispatch_sync(queueA, ^{                                    // 2
        NSLog(@"=== A start ===");                              // 3
        [self taskName:@"TASK A" countTo:8 sleepFor:1.0f];      // 4
        NSLog(@"=== A end ===");                                // 5
    });

    dispatch_async(queueA, ^{                                   // 6
        NSLog(@"=== B start ===");                              // 8
        [self taskName:@"TASK B" countTo:8 sleepFor:1.0f];
        NSLog(@"=== B end ===");                                // 9
    });

    NSLog(@"-- END --");                                        // 7

If we were to run it sync, then async:

1) prints -- START --

2) dispatch_sync Task A onto the concurrent queue. The sync call blocks the calling thread and does NOT RETURN until the task finishes. Since we call it from the main thread, the UI is unresponsive at this point.

3) prints === A start ===

4) Task A is executing.

5) prints === A end ===

6) dispatch_sync returns. Now we dispatch another task via dispatch_async onto the same concurrent queue. Control returns immediately, and at this point the UI is responsive again.

7) Because dispatch_async returned immediately at 6), we print -- END --

8) the task starts and prints === B start ===, and Task B executes.

9) Task B finishes, and we print === B end ===

output:

— START —

=== A start ===

— Task TASK A start —

TASK A – 0

…

TASK A – 7

^^^ Task TASK A END ^^^

=== A end ===

— END —

=== B start ===

— Task TASK B start —

TASK B – 0

TASK B – 1

…

TASK B – 6

TASK B – 7

^^^ Task TASK B END ^^^

=== B end ===

Async on Serial Queues

However, if we were to use a serial queue, each task would finish executing, before going on to the next one.

Hence Task A would have to finish, then Task B can start.

    dispatch_queue_t queue = dispatch_queue_create("Serial Queue", NULL);

    NSLog(@"-- START --");                                      // 1

    dispatch_async(queue, ^{                                    // 2
        NSLog(@" == START ==");                                 // 4
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];     // 5
        NSLog(@" == END == ");                                  // 6
    });

    NSLog(@"-- END --");                                        // 3

1) prints — START —

2) dispatch the task block onto the serial queue via dispatch_async. Returns immediately. UI responsive.

3) prints -- END -- due to execution continuing

4) prints == START == as this block starts to execute

5) Task A executes

6) prints == END == task block finishes

output:

— START —

— END —

== START ==

— Task TASK A start —

TASK A – 0

TASK A – 1

TASK A – 2

TASK A – 3

TASK A – 4

TASK A – 5

TASK A – 6

TASK A – 7

TASK A – 8

TASK A – 9

^^^ Task TASK A END ^^^

== END ==

Sync on Serial Queue

    NSLog(@"-- START --");                                      // 1

    dispatch_sync(queue, ^{                                     // 2
        NSLog(@" == START ==");                                 // 3
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];     // 4
        NSLog(@" == END == ");                                  // 5
    });

    NSLog(@"-- END --");                                        // 6

1) log -- START --

2) puts the task block onto the serial queue via dispatch_sync. Does not return control until the task finishes. Thus, the calling thread (here the main thread, and so the UI) is blocked. Other queues are not affected.

3) log == START == as the task block starts

4) Task A executes

5) log == END == as the task block ends

6) The task block is finished, so dispatch_sync returns control and the UI is responsive again. log -- END --

output:

— START —

== START ==

— Task TASK A start —

TASK A – 0

TASK A – 1

TASK A – 2

…

TASK A – 9

^^^ Task TASK A END ^^^

== END ==

— END —

Serial Queue – Async, then Sync

    - (void)aMethod {
        dispatch_queue_t queue = dispatch_queue_create("Serial Queue", NULL);

        dispatch_async(queue, ^{   // queue up Task A on the serial queue
            // task start
            [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];
            // task end
        });

        dispatch_sync(queue, ^{    // queue up Task B behind Task A and wait for it
            // task start
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
            // task end
        });
    }

What actually happens is that the serial queue executes Task A and Task B one at a time, in the order they were submitted:

1) dispatch_async Task A: Task A gets queued and the serial queue starts working on it. Execution continues because dispatch_async returns right away.

2) dispatch_sync Task B: Task B gets queued behind Task A. By definition of a serial queue, Task B must wait for Task A to finish before it starts. dispatch_sync blocks the calling thread until Task B has finished, so the caller ends up waiting for Task A and then for Task B. It does not block any other queue or thread.

Putting 1) and 2) together: Task A is executing, Task B is waiting for Task A to finish, and the caller of dispatch_sync is waiting for Task B. aMethod is called on the main thread, so it is the main thread that waits. That is why your UISlider is not responsive.

output:

— START —

== START ==

— Task TASK A start —

TASK A – 0

TASK A – 1

TASK A – 2

TASK A – 3

TASK A – 4

…

TASK A – 9

^^^ Task TASK A END ^^^

== END ==

== START ==

— Task TASK A start —

TASK A – 0

…

TASK A – 9

^^^ Task TASK A END ^^^

== END ==

— END —
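The ordering described for async-then-sync on a serial queue can be sketched with an event log instead of NSLog. This is an illustration under assumptions: the function name and queue label are invented, and the short sleep merely stands in for a slow Task A.

```objc
#import <Foundation/Foundation.h>

// Async then sync on one serial queue: B cannot start until A is done,
// and the caller cannot continue until B is done.
static NSArray<NSString *> *AsyncThenSyncOnSerialQueue(void) {
    dispatch_queue_t queue = dispatch_queue_create("com.example.serial", DISPATCH_QUEUE_SERIAL);
    NSMutableArray<NSString *> *events = [NSMutableArray array];

    dispatch_async(queue, ^{
        [NSThread sleepForTimeInterval:0.1];   // pretend Task A is slow
        [events addObject:@"A"];
    });

    dispatch_sync(queue, ^{                    // queued behind A; caller waits here
        [events addObject:@"B"];
    });

    [events addObject:@"END"];                 // only reached after B has run
    return events;
}
```

The result is always A, B, END. The array needs no lock here because the serial queue and the blocking dispatch_sync already guarantee that the three writes never overlap.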

Serial Queue – Sync, then Async

The first dispatch_sync blocks the calling thread, here the main thread, until Task A has finished. Hence the UI is unresponsive while Task A runs.

When Task A finishes, dispatch_sync returns. Task B is then submitted via dispatch_async, which returns immediately.

Thus, the UI is NOT responsive while Task A is running. Once Task A finishes, the serial queue is free to run Task B. Because Task B was submitted with dispatch_async, the main thread does not wait for it, and the UI is responsive while Task B runs.

    - (void)aMethod {
        //dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0ul);
        dispatch_queue_t queue = dispatch_queue_create("Serial Queue", NULL);

        dispatch_sync(queue, ^{    // queue up Task A and wait for it
            // task start
            [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];
            // task end
        });

        dispatch_async(queue, ^{   // queue up Task B and return immediately
            // task start
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
            // task end
        });
    }

Nested dispatches

Async nest Async on a Serial Queue

    NSLog(@"-- START --");                                          // 1

    dispatch_async(queue, ^{                                        // 2
        NSLog(@" -- OUTER BLOCK START -- ");                        // 4
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];         // 5

        dispatch_async(queue, ^{                                    // 6
            NSLog(@" -- INNER BLOCK START -- ");                    // 8
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];     // 9
            NSLog(@" -- INNER BLOCK END -- ");                      // 10
        });

        NSLog(@" -- OUTER BLOCK END -- ");                          // 7
    });

    NSLog(@"-- END --");                                            // 3

1) prints — START —

2) dispatch async a block task onto the serial queue. It returns right away, does not block UI. Execution continues.

3) Execution continues, prints — END —

4) the block task starts to execute. prints — OUTER BLOCK START —

5) Task A executes and prints its stuff

6) dispatch_async another block onto the same serial queue. It returns right away and does not block. The inner block is queued behind the outer block, which is still running.

7) Execution continues, prints -- OUTER BLOCK END --. The outer block is done.

8) The inner block starts processing on the serial queue. prints — INNER BLOCK START —

9) Task B executes and prints stuff

10) prints — INNER BLOCK END —

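Result (the relative order of -- END -- and -- OUTER BLOCK START -- can vary from run to run, since they are logged from different threads):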

— START —

— OUTER BLOCK START —

— END —

— Task TASK A start —

TASK A – 0

…

TASK A – 9

^^^ Task TASK A END ^^^

— OUTER BLOCK END —

— INNER BLOCK START —

— Task TASK B start —

TASK B – 0

…

TASK B – 9

^^^ Task TASK B END ^^^

— INNER BLOCK END —

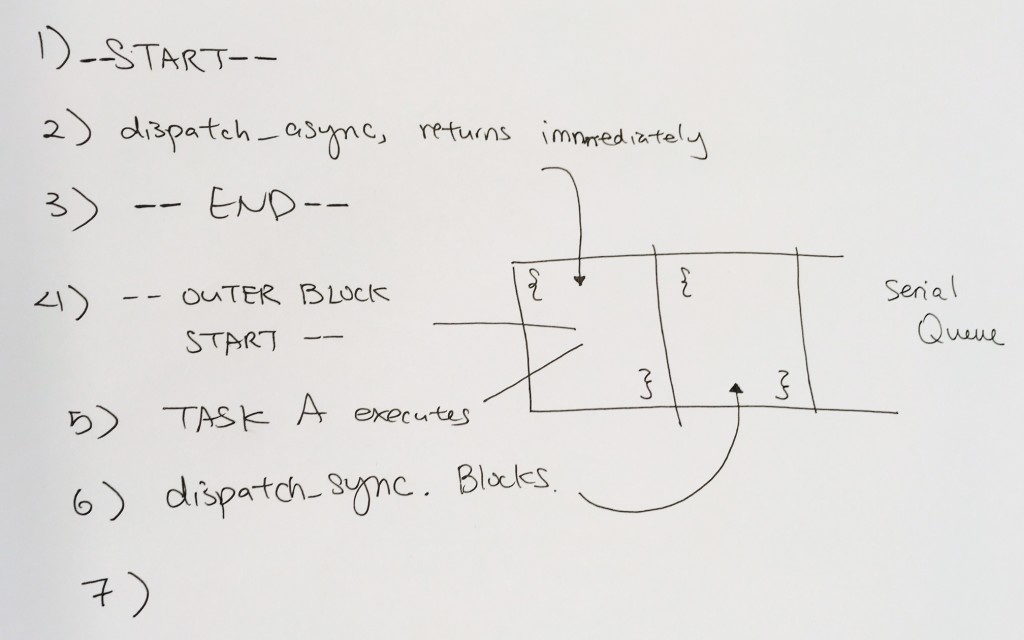

Async nest Sync on a Serial Queue – DEADLOCK!

    NSLog(@"-- START --");                                          // 1

    dispatch_async(queue, ^{                                        // 2
        NSLog(@" -- OUTER BLOCK START -- ");                        // 4
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];         // 5

        dispatch_sync(queue, ^{                                     // 6
            NSLog(@" -- INNER BLOCK START -- ");
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
            NSLog(@" -- INNER BLOCK END -- ");
        });

        NSLog(@" -- OUTER BLOCK END -- ");
    });

    NSLog(@"-- END --");                                            // 3

Notice that we're on a serial queue, which means the queue must finish the current task before moving on to the next one.

The key idea here is that the task block queued at // 2 must complete before any other task on the queue can start.

At // 6, we put another task block onto the same queue, but because we use dispatch_sync, the call does not return until the block at // 6 has finished executing.

The inner block at // 6 cannot start until the outer block from // 2 finishes, and the outer block cannot finish until dispatch_sync at // 6 returns. Each is waiting for the other.

This is what leads to the deadlock. Neither -- INNER BLOCK START -- nor -- OUTER BLOCK END -- is ever printed.
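One way to guard against this kind of self-deadlock is to tag the queue and check the tag before calling dispatch_sync, running the block inline when the code is already on that queue. The sketch below uses dispatch_queue_set_specific and dispatch_get_specific for the tag. MakeTaggedSerialQueue, SyncSafely and the key name are hypothetical helpers introduced here, not part of the original post.

```objc
#import <Foundation/Foundation.h>

// Unique key for the queue-specific tag (its own address is the value).
static const void *const kQueueKey = &kQueueKey;

// Creates a serial queue tagged with a pointer to itself.
static dispatch_queue_t MakeTaggedSerialQueue(const char *label) {
    dispatch_queue_t queue = dispatch_queue_create(label, DISPATCH_QUEUE_SERIAL);
    dispatch_queue_set_specific(queue, kQueueKey, (__bridge void *)queue, NULL);
    return queue;
}

// Runs the block inline if we are already executing on `queue`;
// otherwise falls back to a normal dispatch_sync.
static void SyncSafely(dispatch_queue_t queue, dispatch_block_t block) {
    if (dispatch_get_specific(kQueueKey) == (__bridge void *)queue) {
        block();
    } else {
        dispatch_sync(queue, block);
    }
}

// Nested sync on the same serial queue, without the deadlock.
static NSArray<NSString *> *NestedSyncWithoutDeadlock(void) {
    dispatch_queue_t queue = MakeTaggedSerialQueue("com.example.tagged");
    NSMutableArray<NSString *> *events = [NSMutableArray array];

    SyncSafely(queue, ^{
        [events addObject:@"OUTER START"];
        SyncSafely(queue, ^{                   // already on `queue`: runs inline
            [events addObject:@"INNER"];
        });
        [events addObject:@"OUTER END"];
    });
    return events;
}
```

The inner block runs in the middle of the outer one, so the events come out as OUTER START, INNER, OUTER END. The simpler fix, when the inner work does not need to complete first, is to use dispatch_async for the inner block.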

Sync nest Async on a Serial Queue

    NSLog(@"-- START --");                                          // 1

    dispatch_sync(queue, ^{                                         // 2
        NSLog(@" -- OUTER BLOCK START -- ");                        // 3
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];         // 4

        dispatch_async(queue, ^{                                    // 5
            NSLog(@" -- INNER BLOCK START -- ");                    // 8
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];     // 9
            NSLog(@" -- INNER BLOCK END -- ");                      // 10
        });

        NSLog(@" -- OUTER BLOCK END -- ");                          // 6
    });

    NSLog(@"-- END --");                                            // 7

1) log — START —

2) sync task block onto the queue, blocks UI

3) log — OUTER BLOCK START —

4) Task A processes and finishes

5) dispatch_async another task block onto the queue. The main thread is still blocked by the dispatch_sync from 2). However, execution moves forward within the outer block because dispatch_async returns immediately.

6) execution moves forward and logs -- OUTER BLOCK END --

7) the outer block finishes execution and dispatch_sync returns. The UI has control again. logs -- END --

8) logs -- INNER BLOCK START --

9) Task B executes

10) logs -- INNER BLOCK END --

Async nest Async on Concurrent Queue

    NSLog(@"-- START --");                                          // 1

    dispatch_async(conQueue, ^{                                     // 2
        NSLog(@" -- OUTER BLOCK START -- ");                        // 4
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];         // 5

        dispatch_async(conQueue, ^{                                 // 6
            NSLog(@" -- INNER BLOCK START -- ");                    // 8
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];     // 9
            NSLog(@" -- INNER BLOCK END -- ");                      // 10
        });

        NSLog(@" -- OUTER BLOCK END -- ");                          // 7
    });

    NSLog(@"-- END --");                                            // 3

1) log –START–

2) dispatch_async puts block task onto the concurrent queue. Does not block, returns immediately.

3) execution continues, and we log — END —

4) queue starts processing the task block from //2. prints — OUTER BLOCK START —

5) Task A executes

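6) dispatch_async puts another task block onto the concurrent queue. Now there are 2 blocks. Does not block, returns immediately.

7) prints -- OUTER BLOCK END --, task block #1 is done and de-queued.

8) prints -- INNER BLOCK START --. Because the queue is concurrent, the inner block may start on another thread before 7) has printed, so the order of 7) and 8) is not guaranteed.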

9) Task B executes

10) prints — INNER BLOCK END —

Async nest Sync on Concurrent Queue

    NSLog(@"-- START --");                                          // 1

    dispatch_async(conQueue, ^{                                     // 2
        NSLog(@" -- OUTER BLOCK START -- ");                        // 4
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];         // 5

        // which means -- OUTER BLOCK END -- does not print until we're done!
        dispatch_sync(conQueue, ^{                                  // 6
            NSLog(@" -- INNER BLOCK START -- ");                    // 7
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];     // 8
            NSLog(@" -- INNER BLOCK END -- ");                      // 9
        });

        NSLog(@" -- OUTER BLOCK END -- ");                          // 10
    });

    NSLog(@"-- END --");                                            // 3

1) prints –START–

2) puts block task on concurrent queue. returns immediately so UI and other queues can process

3) since execution immediately returns, we print — END —

4) prints — OUTER BLOCK START —

5) Task A executes

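6) puts another task block onto the concurrent queue with dispatch_sync. The call returns ONLY when this block is finished.

Note that dispatch_sync blocks only the thread that is running the outer block. The main thread is not waiting on anything, which is why the UI is still responsive.

There is no deadlock either: unlike a serial queue, a concurrent queue can start the inner block while the outer block is still running.

7) the inner block starts while dispatch_sync waits, so we print -- INNER BLOCK START --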

8) Task B executes

9) prints — INNER BLOCK END —

10) prints — OUTER BLOCK END —

Sync nest Async on Concurrent Queue

    NSLog(@"-- START --");                                          // 1

    dispatch_sync(conQueue, ^{                                      // 2
        NSLog(@" -- OUTER BLOCK START -- ");                        // 3
        [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];         // 4

        dispatch_async(conQueue, ^{                                 // 5
            NSLog(@" -- INNER BLOCK START -- ");                    // 9
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];     // 10
            NSLog(@" -- INNER BLOCK END -- ");                      // 11
        });

        NSLog(@" -- OUTER BLOCK END -- ");                          // 6
    });                                                             // 7

    NSLog(@"-- END --");                                            // 8

1) logs — START —

2) dispatch_sync a block task onto the concurrent queue, we do not return until this whole thing is done. UI not responsive

3) prints — OUTER BLOCK START —

4) Task A executes

5) dispatch_async a 2nd block onto the concurrent queue. The async call returns immediately.

6) prints — OUTER BLOCK END –.

7) The 1st task block finishes, and dispatch_sync returns.

8) prints — END —

9) prints — INNER BLOCK START —

10) Task B executes

11) prints — INNER BLOCK END —

2 serial queues

Say it takes 10 seconds to complete a DB operation.

Say I have a 1st serial queue. I use dispatch_async to quickly throw tasks on there without waiting.

Then I have a 2nd serial queue. I do the same.

When they execute, the 2 serial queues will be executing at the same time. A serial queue only serializes the blocks submitted to it; it knows nothing about other queues. In a situation where you have a DB resource, funnelling every access through ONE serial queue makes it thread safe, because all the operations line up in that queue and run one at a time.

But what if someone else spawns a SECOND serial queue? Those 2 serial queues will be accessing the DB resource at the same time!

    dispatch_queue_t queue = dispatch_queue_create("Serial Queue", NULL);
    dispatch_queue_t queue2 = dispatch_queue_create("Serial Queue 2", NULL);

    dispatch_async(queue, ^{    // queue up a task on queue
        // must finish this line
        [self taskName:@"TASK A" countTo:100 sleepFor:1.0f];
    });

    dispatch_async(queue2, ^{   // queue up a task on queue2
        // task start
        [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
        // task end
    });

— Task TASK A start —

— Task TASK B start —

TASK B – 0

TASK A – 0

TASK B – 1

TASK A – 1

TASK B – 2

As you can see both operations are writing to the DB at the same time.

If you were to use dispatch_sync instead:

    dispatch_queue_t queue = dispatch_queue_create("Serial Queue", NULL);
    dispatch_queue_t queue2 = dispatch_queue_create("Serial Queue 2", NULL);

    dispatch_sync(queue, ^{     // queue up a task on queue and wait for it
        // must finish this line
        [self taskName:@"TASK A" countTo:100 sleepFor:1.0f];
    });

    dispatch_sync(queue2, ^{    // queue up a task on queue2 and wait for it
        // task start
        [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
        // task end
    });

dispatch_sync will not return until the current task block is finished. The good thing about it is that the DB operation on serial queue ONE finishes before the DB operation on serial queue TWO starts.

dispatch_sync on serial queue ONE blocks the calling thread, so the second dispatch_sync, the one that targets serial queue TWO, is not even submitted until Task A is done.

TASK A – 6

TASK A – 7

TASK A – 8

TASK A – 9

^^^ Task TASK A END ^^^

— Task TASK B start —

TASK B – 0

TASK B – 1

TASK B – 2

However, we are also blocking the main thread, because both dispatch_sync calls are made from the main thread! -.-

In order not to block the main thread, we want to make those calls from another queue that runs concurrently with the main queue.

Thus, we just throw everything inside a block on a concurrent queue.

    dispatch_async(conQueue, ^{

        dispatch_sync(queue, ^{     // queue up a task on queue
            // must finish this line
            [self taskName:@"TASK A" countTo:10 sleepFor:1.0f];
        });

        dispatch_sync(queue2, ^{    // queue up a task on queue2
            // must finish this line
            [self taskName:@"TASK B" countTo:10 sleepFor:1.0f];
        });
    });

Our concurrent queue runs its block on a background thread, alongside the main queue. Thus, the UI stays responsive.

The blocking of our DB tasks happens within the context of that background block. It blocks processing there, but won't touch the main queue. Thus, it won't block the main thread.
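As noted earlier, a single serial queue is enough to make a shared resource thread safe, provided every access goes through it. The sketch below illustrates that alternative. A plain counter stands in for the DB, and the function name and queue label are assumptions made for the example.

```objc
#import <Foundation/Foundation.h>

// Many concurrent writers, one serial queue guarding the shared resource.
static NSInteger CountWithSharedSerialQueue(size_t writers) {
    dispatch_queue_t dbQueue = dispatch_queue_create("com.example.db", DISPATCH_QUEUE_SERIAL);
    dispatch_queue_t workers = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);
    __block NSInteger rows = 0;   // stand-in for the shared DB resource

    // dispatch_apply runs the iterations concurrently and returns once
    // all of them have finished.
    dispatch_apply(writers, workers, ^(size_t i) {
        dispatch_sync(dbQueue, ^{
            rows += 1;            // only one writer at a time gets here
        });
    });
    return rows;
}
```

With the increment routed through dbQueue, every update is applied exactly once, so CountWithSharedSerialQueue(1000) returns 1000. Incrementing rows directly inside the dispatch_apply block would be a data race and could lose updates.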